Finding an Audience to Fix Flaws


Infosec conferences are a great venue for sharing tools, techniques, and tactics across a range of security topics, from breaking systems to building them. They're a chance not only to learn from peers but also to meet new ones and connect with others who are tackling similar problems. One eternal topic is the “shift left” motto: building security into the SDLC as early as possible.

One way to shift left is to make sure developers aren’t left out of the conversation. Not every dev team has the budget to attend security conferences, and those conferences are quite often attended by practitioners who don’t build software as part of their daily work. I’ve attended many security conferences (and enjoy them!), but I’ve also wanted to find developer-oriented conferences where I could bring an appsec message to developers rather than expect them to discover security by chance.

This week I had the opportunity to present at the Star West developer conference. My presentation was about building metrics around the time and money invested in finding vulns within apps, drawing on data from real-world bounty programs and pen tests. It’s easy to agree that finding vulns is important. What’s harder is figuring out when the time and money spent on finding them is well spent and when those investments would be better directed to other security strategies.
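As a rough illustration of that kind of metric, here is a minimal Python sketch that computes cost per valid vuln for different testing efforts. The `Engagement` type, the `cost_per_vuln` helper, and all of the figures are hypothetical. The sketch also assumes cost is total spend in dollars and counts every valid vuln equally, ignoring severity, which a real program would likely want to weigh.

```python
from dataclasses import dataclass


@dataclass
class Engagement:
    """One hypothetical testing effort, such as a pen test or a bounty period."""
    name: str
    cost: float       # total spend (assumed: fees plus bounty payouts, in USD)
    valid_vulns: int  # confirmed, non-duplicate findings


def cost_per_vuln(e: Engagement) -> float:
    """Average spend per valid vuln; infinite if nothing was found."""
    if e.valid_vulns == 0:
        return float("inf")
    return e.cost / e.valid_vulns


# Hypothetical sample data, for illustration only.
engagements = [
    Engagement("pen test, Q1", 20000, 8),
    Engagement("bug bounty, Q1", 15000, 12),
]

for e in engagements:
    print(f"{e.name}: ${cost_per_vuln(e):,.0f} per valid vuln")
```

A lower cost per vuln isn't automatically better; the same approach could track hours invested alongside dollars, or be computed per severity level.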

A few points from the presentation:

- Always strive to maintain an inventory of your apps, their code, and their dependencies. This is easier said than done, but it’ll always be a foundational part of an appsec program.

- Find metrics that are meaningful to your program. For example, if you’re running a bounty program, when would it make more sense to attract more participants vs. engage the most prolific vuln reporters? If you’ve never conducted any security testing against an app, should you start with a pen test or a bug bounty? How might that choice affect your appsec budget?

- Organizations and apps vary widely. Resist trying to compare your own metrics to other programs whose context, assumptions, and environments don’t match yours. Instead, follow your own metrics over time in order to observe trends and whether they’re influenced by your security efforts.
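To make the second and third points a bit more concrete, the following sketch tracks one possible bounty metric over time: per quarter, how many valid reports came in, how many distinct reporters submitted them, and what share came from the most prolific reporter. The report data and the `reporter_concentration` function are hypothetical, for illustration only.

```python
from collections import Counter, defaultdict

# Hypothetical sample data: (quarter, reporter) for each valid bounty report.
reports = [
    ("2017-Q1", "alice"), ("2017-Q1", "alice"), ("2017-Q1", "bob"),
    ("2017-Q1", "carol"),
    ("2017-Q2", "alice"), ("2017-Q2", "dave"), ("2017-Q2", "erin"),
    ("2017-Q2", "frank"), ("2017-Q2", "bob"),
]


def reporter_concentration(reports, top_n=1):
    """Per quarter: report count, distinct reporters, and the share of
    reports submitted by the top_n most prolific reporters."""
    by_quarter = defaultdict(Counter)
    for quarter, reporter in reports:
        by_quarter[quarter][reporter] += 1

    summary = {}
    for quarter in sorted(by_quarter):
        counts = by_quarter[quarter]
        total = sum(counts.values())
        top = sum(n for _, n in counts.most_common(top_n))
        summary[quarter] = {
            "reports": total,
            "reporters": len(counts),
            "top_share": top / total,
        }
    return summary


for quarter, stats in reporter_concentration(reports).items():
    print(quarter, stats)
```

Comparing each quarter with your own earlier quarters, rather than with another program's numbers, keeps the focus on trends in your own context. A shift in `top_share` might, for example, prompt the question of whether to engage top reporters more or to attract new participants.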

Although the presentation begins with data related to finding vulns, it doesn’t forget that fixing vulns is what actually makes apps more secure. Regardless of how you’re discovering vulns, your DevOps team should be fixing them, which means you should also have metrics around that process.

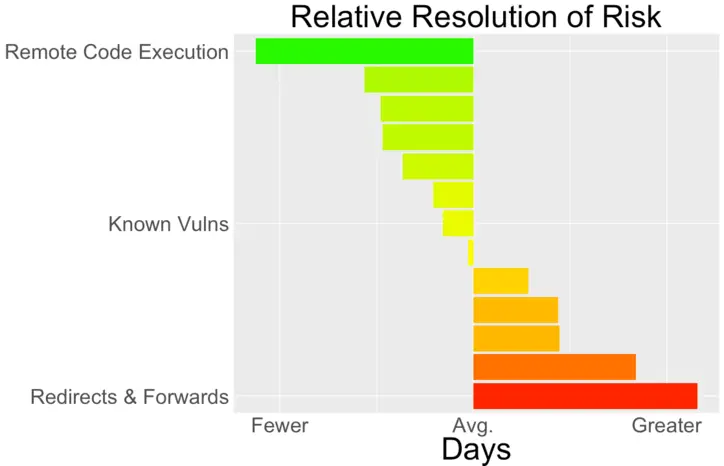

One way I looked at this data was the relative time it takes organizations to fix different categories of vulns. Rather than ask how long it takes to fix all vulns, I thought it’d be interesting to see how quickly different types of vulns are fixed relative to each other.

For the chart, I took the average time to fix all vulns, then compared each category against that average. It wasn’t too surprising to see that Remote Code Execution stood out as requiring fewer days than average to fix; it’s typically a high-impact vuln that puts an app at significant risk of compromise. On the other hand, Redirects & Forwards took longer to fix. One theory is that the extra days reflect the relatively low risk of such vulns, which don’t need immediate attention. Another is that they’re harder to fix because of the nuances of allowing certain types of redirects while disallowing others. The fact that Unvalidated Redirects and Forwards dropped off the OWASP Top 10 in the recent 2017 update lends additional support to the idea that these are lower-risk vulns.
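The category-versus-average comparison can be sketched in a few lines of Python. This assumes each fixed vuln is recorded as a category plus the number of days between report and fix, and that the baseline is the mean across all fixed vulns. The sample data and the `relative_time_to_fix` name are hypothetical, not the presentation's data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sample data: (category, days from report to fix).
fixes = [
    ("Remote Code Execution", 4), ("Remote Code Execution", 6),
    ("Cross-Site Scripting", 12), ("Cross-Site Scripting", 18),
    ("Redirects & Forwards", 30), ("Redirects & Forwards", 40),
]


def relative_time_to_fix(fixes):
    """Return each category's mean days-to-fix minus the mean over all fixes.
    Negative values mean the category is fixed faster than average."""
    overall = mean(days for _, days in fixes)
    by_category = defaultdict(list)
    for category, days in fixes:
        by_category[category].append(days)
    return {cat: mean(days) - overall for cat, days in by_category.items()}


# Print categories from fastest to slowest relative to the average.
deltas = relative_time_to_fix(fixes)
for category, delta in sorted(deltas.items(), key=lambda kv: kv[1]):
    print(f"{category}: {delta:+.1f} days vs. average")
```

Using the median instead of the mean would make the comparison less sensitive to a handful of very slow fixes, at the cost of hiding those outliers.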

In any case, having metrics puts us on the path to data visualization. Together, these steps let us start answering initial questions about the state of an app’s security, and then give us a chance to ask follow-up questions about whether processes are working or whether we have blind spots in the data we’d like to have.

Appsec doesn’t happen in a vacuum. There’s a big difference between lamenting a perceived lack of security awareness among developers and engaging them on security issues that are relevant to their work. In addition to being relevant, the message should be constructive. Adding metrics to the discussion helps illuminate when efforts are successful, where they can be improved, and where more data is needed. Include the DevOps team as active participants in developing questions and metrics. Audience participation is a great way to build better appsec.